NExT-QA: Next Phase of Question-Answering to Explaining Temporal Actions

|

|

|

|

|

Introduction

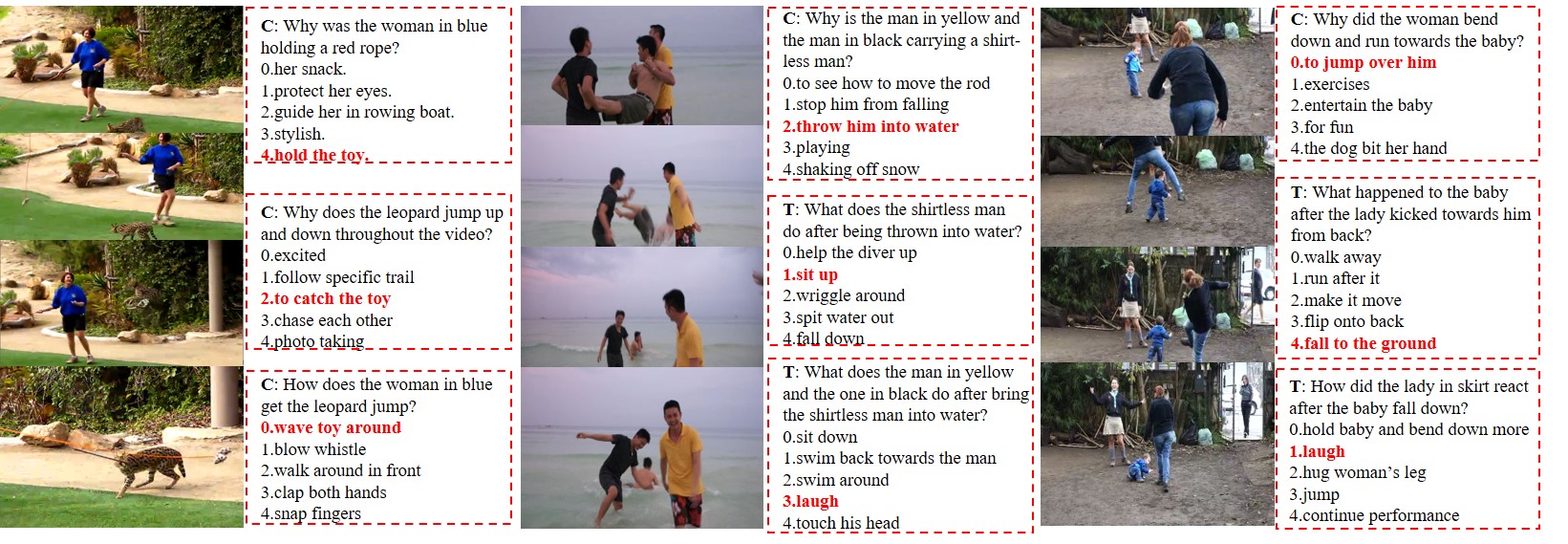

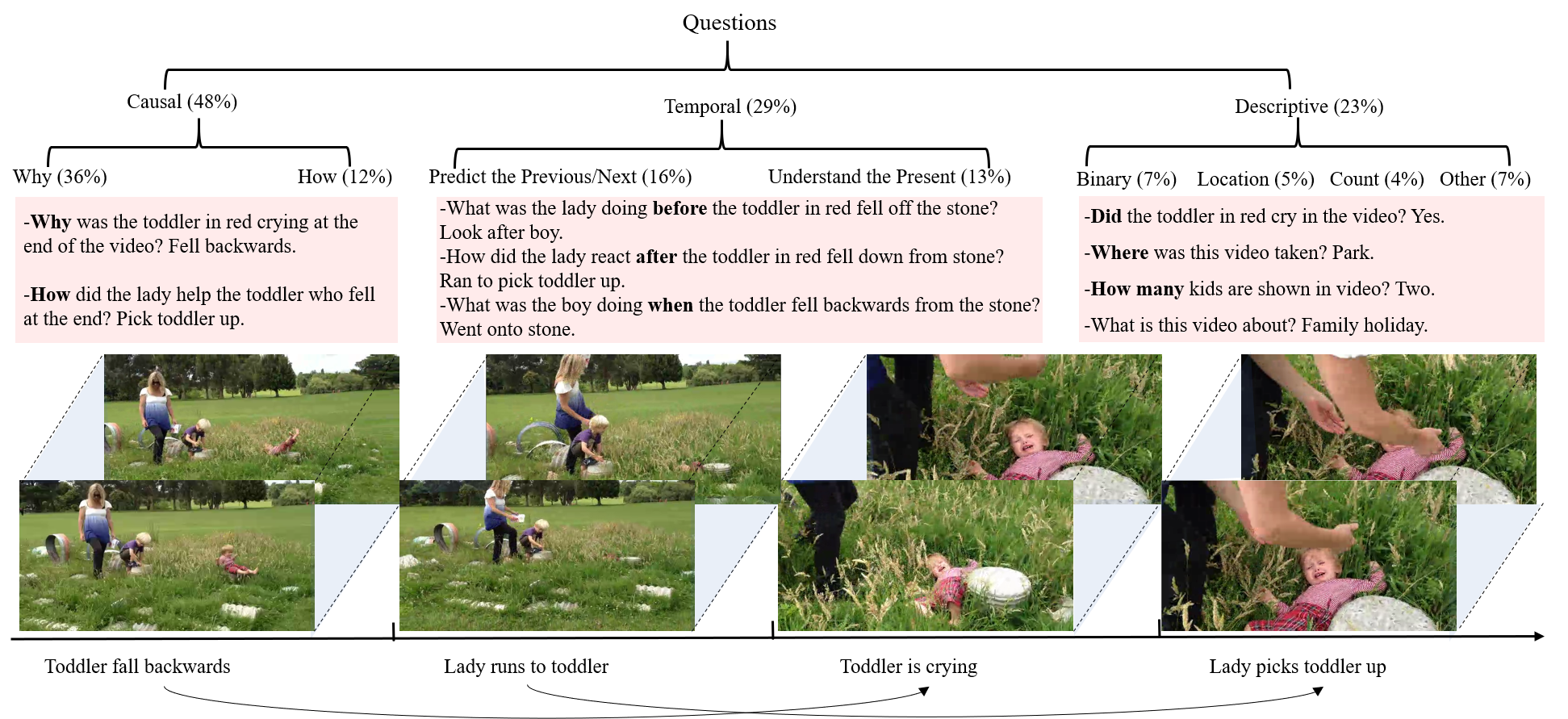

Actions in videos are often not independent but rather related with causal and temporal relationships. For example, in the video shown below, a toddler cries because he falls, and a lady runs to the toddler in order to pick him up. Recognizing the objects “toddler”, “lady” and describing the independent action contents like “a toddler is crying” and “a lady picks the toddler up” in a video are now possible with advanced neural network models. Yet being able to reason about their causal and temporal relations and answer natural language questions (e.g., “Why is the toddler crying?”, “How did the lady react after the toddler fell?”), which lies at the core of human intelligence, remains a great challenge for computational models and is also much less explored by existing video understanding tasks. NExT-QA is proposed to benchmark such a problem; it hosts causal and temporal action reasoning in video question answering and it is also rich in multi-object interactions in daily activities.

Data Statistics

NExT-QA contains a total of 5440 videos with average length of 44s and about 52K manually annotated question-answer pairs grouped into causal (48%),temporal (29%) and descriptive (23%) questions. We specially annotate for each video about 10 questions covering different kinds of contents. The dataset are split into train/val/test: 3870/570/1000, in which the 1000 test videos areOpen-ended QA

37,523

5,343

9,178

52,044

Download

News: please refer to our Github Data Preparation section for downloading.

Citation

@InProceedings{xiao2021next,

author = {Xiao, Junbin and Shang, Xindi and Yao, Angela and Chua, Tat-Seng},

title = {NExT-QA: Next Phase of Question-Answering to Explaining Temporal Actions},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2021},

pages = {9777-9786}

}

Acknowledgement

We appreciate the help from Dr.Tao Zhulin's group (CUC) when constructing this page.